Automatic Keyframe Selection for RGB-D SLAM

Original title in Japanese publication:

林琨,佐藤雄隆,岩田健司:RGB-Dカメラを用いたSLAMにおけるキーフレーム選択に関する考察,ビジョン技術の実利用ワークショップ (ViEW2014)講演論文集,CD-ROM,2014

Abstract

In recent years, as the affordable RGB-D cameas have become more and more popular, the SLAM problem has also brought researchers’ attention. However, if the estimation is done in a consectutive manner, which means estimate the motion using every frame pair of time t and t + 1, the estimation error accumulates and it will introduce a large geometric distortion after a number of frames. Meanwhile, since all of the frames are being used, it’s also challenging to store all of these frame data and perform optimization or meshing. In this research, in order to tackle the problems mentioned above, we propose a novel approach for automatically choosing keyframes based on the inlier distribution. In this way, we reduced the accumulated error and also the number of frames we need to store, since only the a small portion of keyframes are chosen.

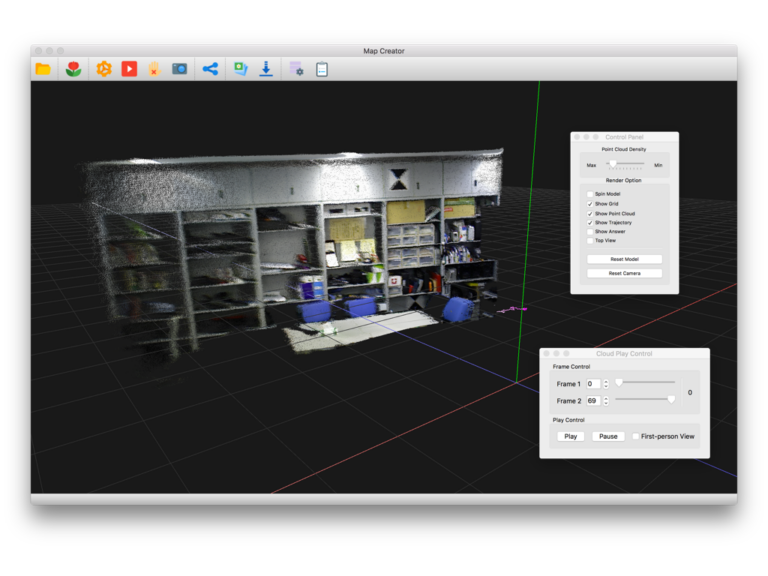

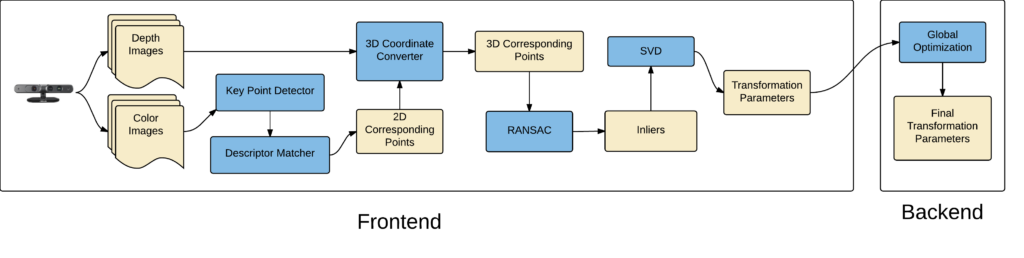

Existing Approach

A typical flow of the RGB-D SLAM process is illustrated in the diagram above. The basically idea is to exploit the fact that a depth camera like Asus Xtion or Microsoft Kinect can provide calibrated color and depth image pair, once we can find good correspondences in the 2D features, we can easily find their counterparts in the depth image and obtain their 3D locations. Then the rigid motion computation is straight forward.

Finding Correspondences in 2D

In this work, we use classical keypoints and its corresponding descriptors such as SIFT and SURF. Bidirectional matching is used such that we can only retain the good keypoints and their matches.

Rigid Motion Computation

After finding the 2D correspondences in two frams, we obtain the matched 3D points by for free thanks to the depth cametas we are using. For computing the rigid motion between two sets of matched 3D points, we need to find the best homogeneous transformation matrix given by

where is the

-th point in the first frame, and

is the

-th in the y frame. This is a well-known problem with a closed form solution given by doing a SVD on the covariance matrix of the two point sets.

However, naively apply this to the 2 point sets usually will not produce the ideal solution due to mismatches in 2D and noise/outliers in the point cloud from the camera. Here, we apply a RANSAC technique for estimating the rigid motion.

Bundle Adjustments

Here by tracking the 2D features using methods like optical flow, we can also obtain the traces of 3D points. By reprojecting the same 3D points back to the frames that observed them, we can construct a minimization problem to minimizing the reprojection error. Here, we are using the well-known non-linear optimization method call Levenberg-Marquardt method.

Loop Closure

When we detect that the camera is visiting the same place again, we could compute the motion between thesee two frames, and correct the accumulated errors so far by distributing them into all os the frames so far. There are multiple ways for detecting revisit detection, such as random sampling or BoW(bag of visual words). Here we are using random sampling approach. Then we can run bundle adjustment again for error distribution.

Issues with Existing Approach

As mentioned in the abtract, the accumulated estimation errors are inevitable. If one estimates the camera trajectory for a large number of frames, the drift in camera poses are significant. Not to mention that the data we need to store could also be intractable. Thus we propose an automatic keyframe selection to alleviate this problem.

Keyframe Selection

Keyframe Criteria

Here we introduce a noval simple but effective technique for automatically selecting the frames to reduce the accumulated estimation errors. We observe that the as the camera is moving, the overlapping area in the camera is smaller and smaller, and will gradually start to form a pattern. Namely, if the movement between the -th frame and the 1st frame is large enough, the correspondence between these two frames would form a pattern. Since the 1st frame by default is selected as a key frame, we then select this

-th frame as the 2nd keyframe. This keyframe will be our new reference frame and we repeat the process. The way how a keyframe is by doing a PCA analysis on the matched 2D keypoints in the color image, and examine the ratio of the components and their absolute values.

Long and Narrow Ellipse

When the camera is translating and/or rotating horizontally, the distribution of the matches keypoints would theoratically become a long and narrow ellipse. When it’s close to this form, even close to linear, we conclude that the camera has been moved far enough and it’s a good chance to choose the current frame as the new keyframe. Here we use an empirical threshold of 0.85 which represents the ratio between the first and the second pricipal components.

Small Span in All Directions

When the camera is translating in the same direction as the optical axis, the keypoints are roughly equally spanning in both x and y direction. However the variance in both direction will be smaller and smaller. Thus we determine the the camera has travelled far enough and it’s a good chance to choose the current frame as the new keyframe.

Results

The tested approaches are 1) use all frames 2) use a fixed step size for skipping frames 3) proposed keyframe selection.

Static Test

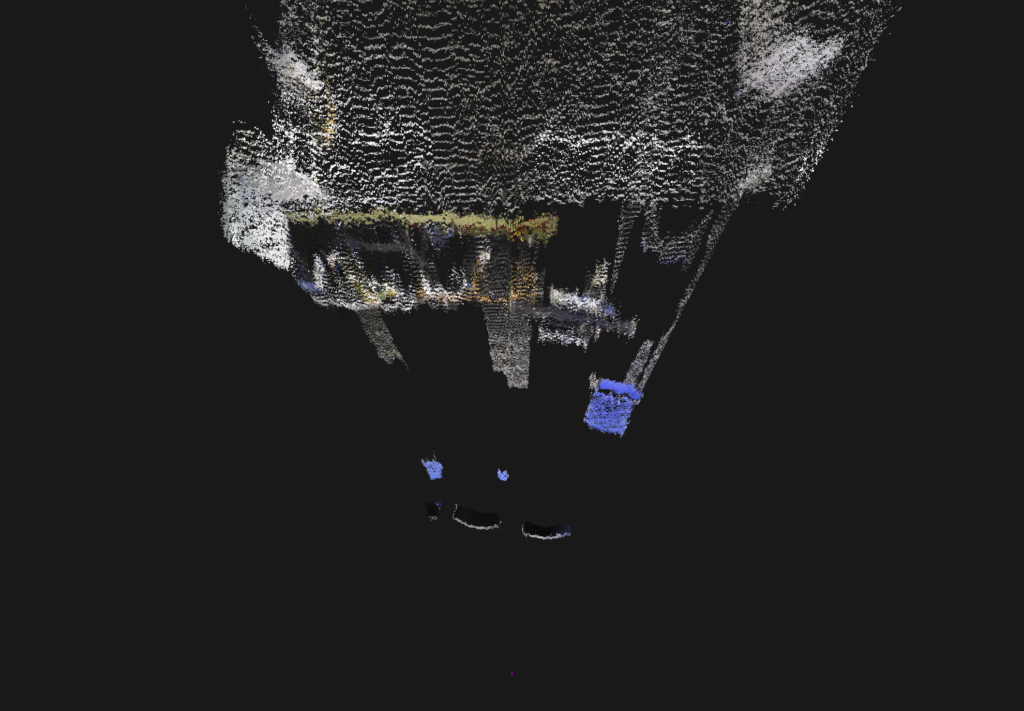

Here since the step size is a parameter, for the sake of simplicity we cheat by setting the step size such that it only pick the first and last frame. Only can clear see that all-frame approach is blurry due to accumulated errors.

| All frames | Fixed skpping step size / PCA-based keyframe selection | |

| Number of frames used | 736 | 2 |

| Rough accumulated position error (meters) | 0.1129 | 0.0096 |

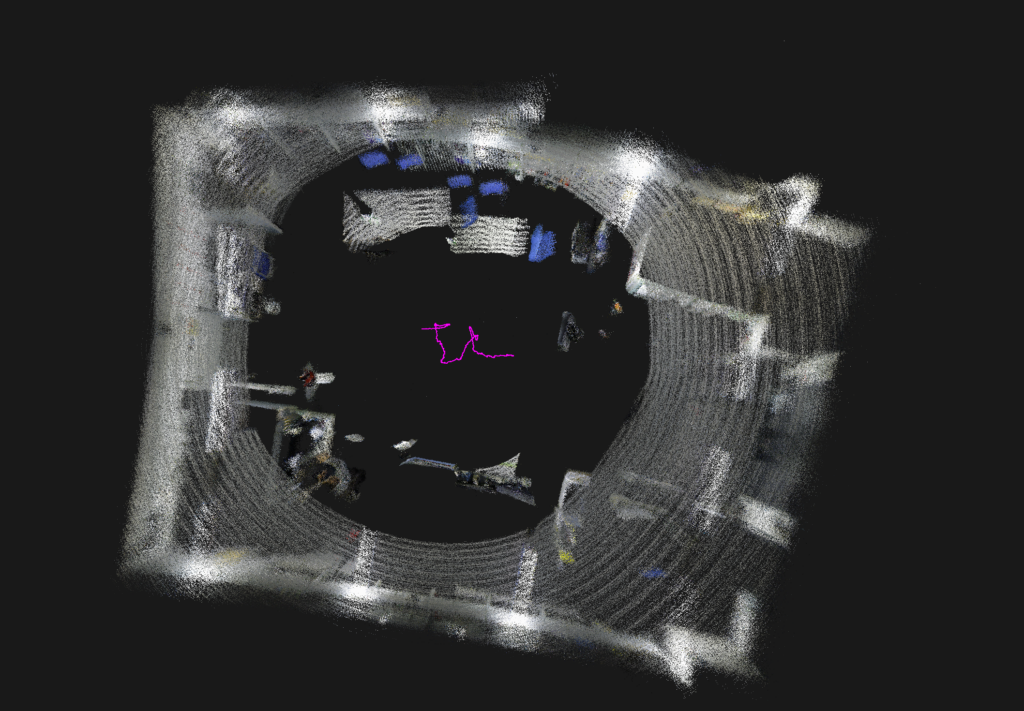

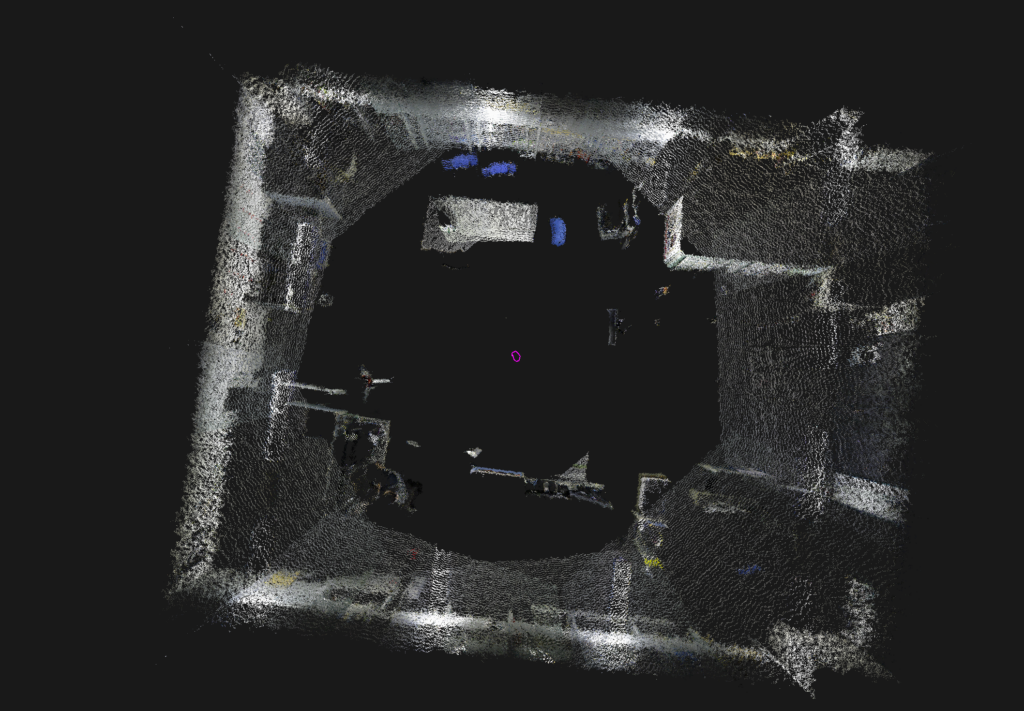

360 Degrees Rotation Test

Since the 2D features are rich in this particular room, the 2nd and 3rd approaches are visually equally correct. However we can see that the all frame one is distorted after a full rotation.

| All frames | Fixed skipping step size | PCA-based keyframe selection | |

| Number of frames used | 1270 | 11 | 11 |

| Rough accumulated position error (meters) | 1.65 | 0.25 | 0.28 |

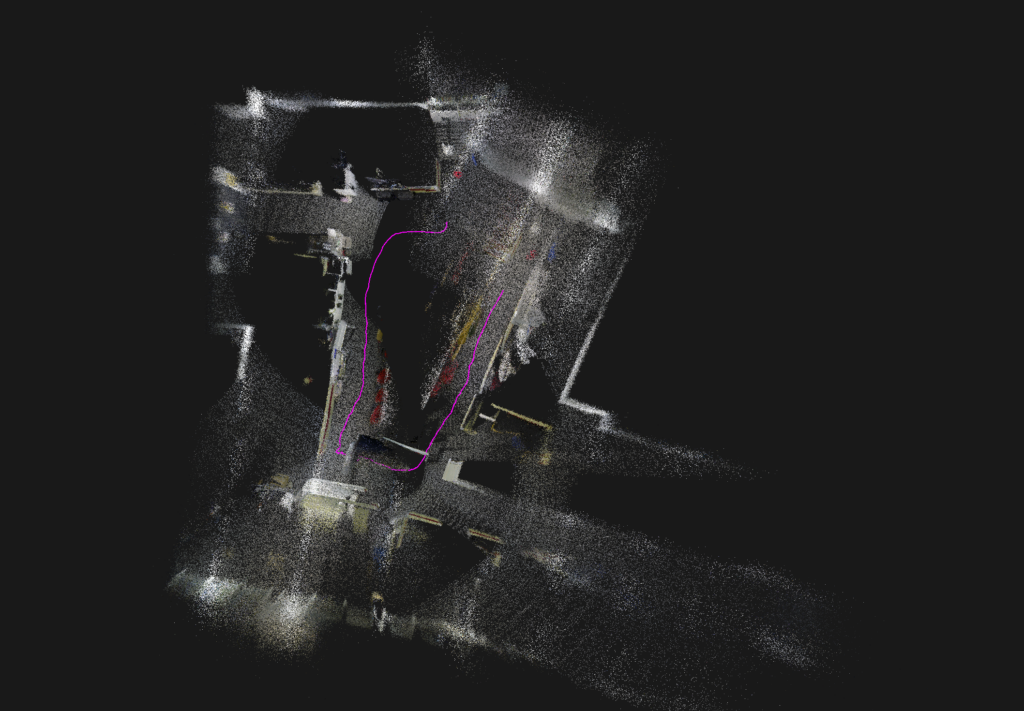

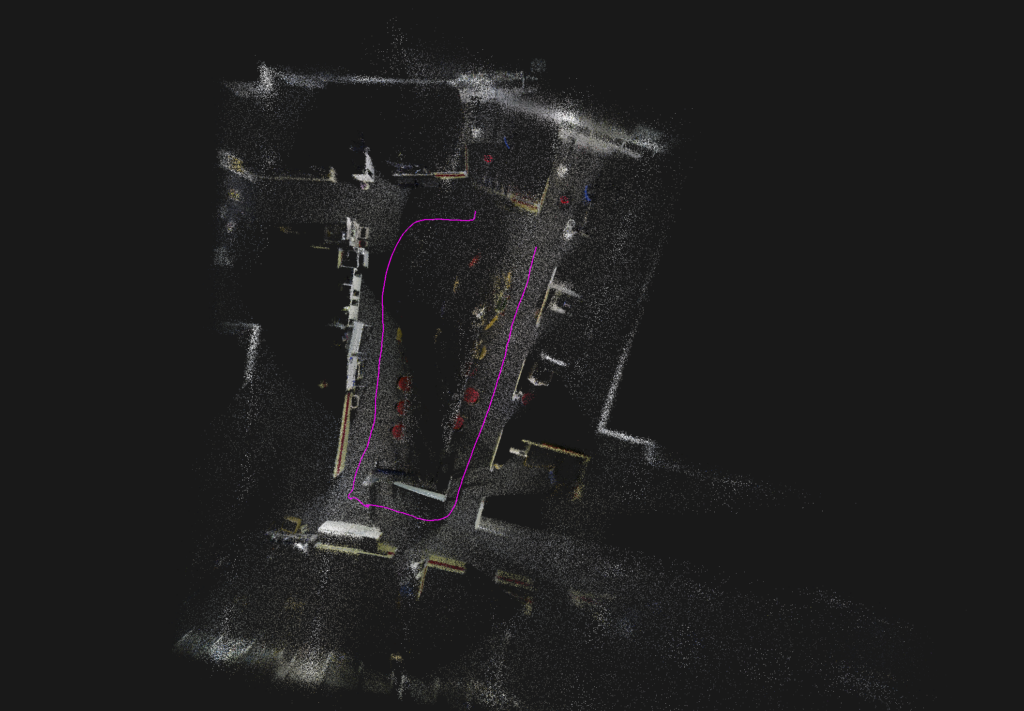

Rotation Translation Test

Here we can clearly see that since the fixed skipping step size is not exploiting the statistical properties of the data, it’s better than all-frame approach. However the PCA-based keyframe selection can produce the best result among these three.

| All frames | Fixed skipping step size | PCA-based keyframe selection | |

| Number of frames used | 2387 | 342 | 374 |

| Rough accumulated position error (meters) | 3.15 | 2.16 | 0.88 |

The fixed step size for the 2nd approach is set in the way that it can complete this task.However it’s very hard tweak this parameter such that it will work on any scenario. On the other hand it also showed that even it used the least number of frames, it did not produce the best result for the reason mentioned in previous sections.

Conclusion

In this work, we discussed about the general RGB-D SLAM process, and the issues exists in the current approaches. We introduced a PCA-based automatic keyframe selection technique for resolving the accumulated error and the storage consumption issue. Lastly we run rests on three scenarios to prove the effectiveness of the proposed approach.